Project Gutenberg, started in 1971, is the oldest part of the modern free culture movement. Wikipedia is a relative upstart, riding the wave of free software's success and extending the idea to other kinds of information content. Today, Project Gutenberg, with over 24,000 e-texts, is probably larger than the legendary Library of Alexandria. Wikipedia is the largest and most comprehensive encyclopedic work ever created. It's common to compare it to Encyclopedia Britannica, but the two are hardly comparable works: Wikipedia is dozens of times larger and covers many more subjects. Accuracy is a more debatable topic, but studies have suggested that the accuracy gap between Wikipedia and Britannica is much smaller than one might naively suppose.

Myth #2

"Even if you can do large things with bazaar methods, corporations are always going to do bigger and better work."

Unlike the previous myth, this one is largely unchallenged. Even inside the free culture community, there is a strong perception of the community as a rebel faction embattled against a much more powerful foe. Yet some projects challenge this worldview.

Measuring Wikipedia

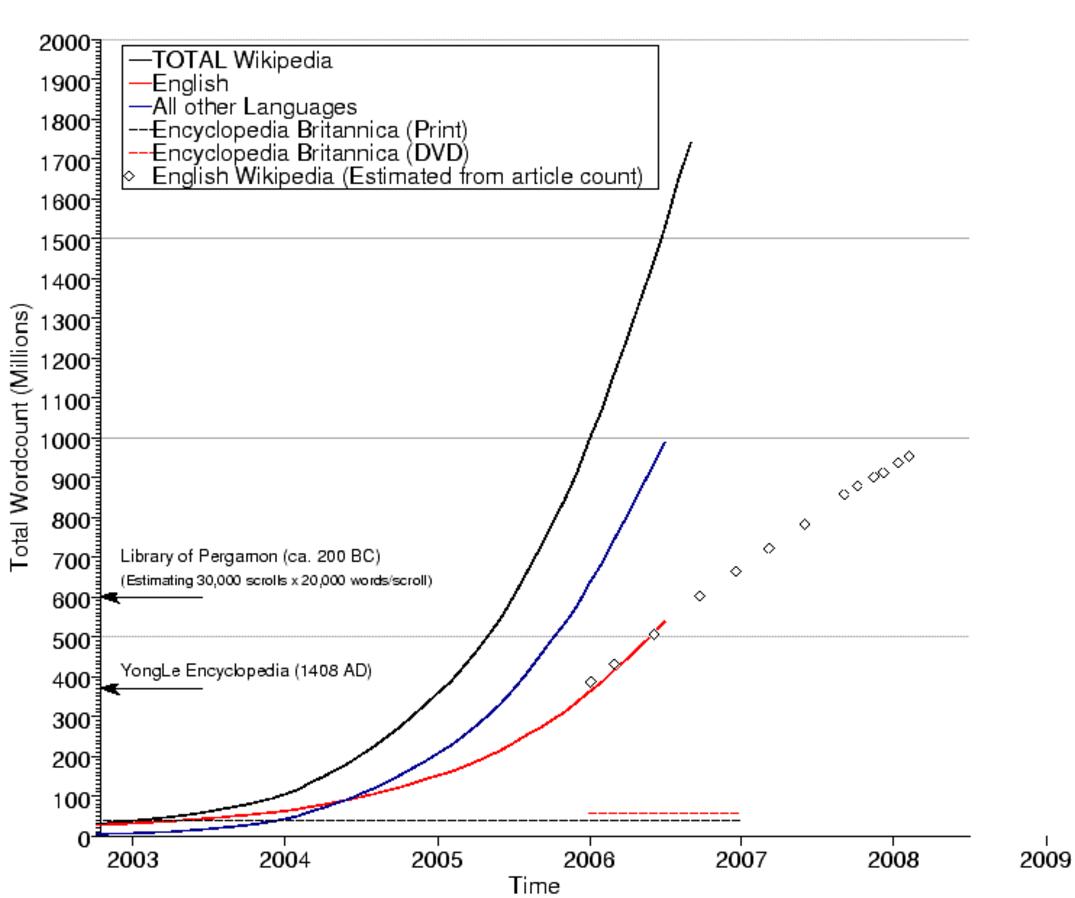

It's actually a bit hard to say exactly how large Wikipedia is today, because the log engine the site used to measure its size started to fail in 2006 under the enormous size of the database. Since then, no direct data has been available on the total size of Wikipedia, nor on the English-language version (unsurprisingly, the largest). Data does exist for some of the less populous language versions, simply because they haven't grown so large yet.

However, we can make some estimates based on the evidence from before 2006 and on the somewhat less complete statistics that are still available. 2006 was a pivotal year for Wikipedia: it was the year Wikipedia surpassed the Yong-Le Encyclopedia, previously the largest encyclopedic work ever created. Commissioned by the Emperor of China in 1403, the Yong-Le Encyclopedia was so large that only one copy of the original manuscript was ever made. It ran to roughly 23,000 chapters bound into some 11,000 volumes, and unfortunately it does not survive intact into the present day (there were, after all, only two copies in existence).

It was also the year in which Wikipedia apparently transitioned from "exponential" to roughly "linear" growth, which can be regarded as an important step toward maturity. Instead of growing explosively, as it did in its first few years, Wikipedia is now moving into a more sustainable growth pattern, with increasing effort going into improving the quality of existing articles rather than adding new ones. That is not to say that new articles aren't being written: the growth may be linear, but it's linear at something close to adding a whole new Yong-Le Encyclopedia per year!


This is an expected pattern: the complete growth curve is typically a "sigmoid" (so named because it is S-shaped), with an initial period of exponential growth while there is no retarding force at all, followed by roughly linear growth, and finally an asymptotic taper as the phenomenon runs into environmental limits. Wikipedia appears to have exhausted the potential for rapidly increasing labor and has already picked the "low-hanging fruit" of encyclopedic entries. It is now moving into a phase of growth driven primarily by the effort of the existing "Wikipedians" (by now a fairly stable population, with newcomers balanced by attrition). The growth rate therefore reflects a fairly constant effort put into improving the encyclopedia. Evidence also suggests that maintenance and quality control now represent a much larger fraction of the work, as more edits are dedicated to revising (and reverting) existing pages than to adding new ones. There is also, of course, continuing exponential growth among the less well-represented languages in Wikipedia, which contributes to the total.
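As a rough illustration of the S-curve described above, here is the standard logistic model. This is an assumption on our part (the growth pattern is only described qualitatively here), and the symbols are illustrative rather than fitted values: $K$ is the eventual limit (carrying capacity), $r$ the early growth rate, and $t_0$ the inflection point.

$$
N(t) = \frac{K}{1 + e^{-r(t - t_0)}}
\qquad
N(t) \approx
\begin{cases}
K\,e^{r(t - t_0)}, & t \ll t_0 \quad \text{(exponential phase)}\\[4pt]
\dfrac{K}{2} + \dfrac{rK}{4}\,(t - t_0), & t \approx t_0 \quad \text{(roughly linear phase)}\\[4pt]
K, & t \gg t_0 \quad \text{(asymptotic taper)}
\end{cases}
$$

Because the curvature vanishes at the inflection point, the middle of the curve is very nearly a straight line with slope $rK/4$, which is one way to read a "linear" phase like the one Wikipedia appears to have entered around 2006.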

Quantity and quality

Of course, if Wikipedia is, as some have suggested, just an "enormous pile of rumors", then its size is not necessarily a good thing. But in fact Wikipedia is surprisingly accurate. A 2005 study in Nature found that, in the area of science, Wikipedia was only slightly less accurate than Britannica, though it found a number of mistakes in both publications [1]. Interestingly, all of the Wikipedia articles the study objected to have since been edited to fix the problems, while the same cannot be said for Britannica, which is harder to change.

Wikipedia covers many areas of knowledge, such as popular culture, that other encyclopedias cannot possibly hope to keep up with (try looking up episode summaries for Buffy the Vampire Slayer in Britannica!). Understandably, it is particularly complete in computer science and software.

Probably Wikipedia's weakest point is its susceptibility to intentional bias: many individuals, organizations, and governments have been known to edit Wikipedia articles to put themselves in a more favorable light. On the other hand, their critics may edit the same articles to be harsher, and in the end these effects appear to balance out for all but the most controversial topics. Even there, we have to acknowledge that Wikipedia fairly depicts controversial topics in all of their controversy (try looking up Evolution, Creationism, and George W. Bush in Wikipedia for interesting examples).


These weaknesses describe what might be dubbed the "editorial bias" of Wikipedia, which represents the collective bias of the society of people willing to contribute to the project. It has to be remembered, though, that conventional encyclopedic works are also subject to editorial bias, usually that of a single organization. As it stands, researchers using Wikipedia have to take the same critical approach they have always applied to encyclopedias as sources of information, and they must follow up the sources themselves for serious scholarly work.

Although there has always been concern about intentional vandalism, especially by anonymous contributors, it is not as much of a problem as many would imagine. A study at Dartmouth concluded that anonymous contributors improved articles roughly as much as signed-in users did. It therefore appears likely that the Delphi effect is out-competing vandalism and intentional bias in Wikipedia. In other words, distributed, community-based editorial review works, just as distributed debugging does for free software. Biases and judgment calls remain a problem, but in the end they appear to balance out for almost all articles.

Project Gutenberg

Started in 1971, Project Gutenberg is the granddaddy of free culture projects. It predates much of the thinking about the "intellectual commons", and it came more than a decade before the GNU Manifesto (1985). As such, it does not reflect modern ideas about free licensing and instead focuses on public domain works. That, along with its insistence on "plain text" representations of the works, reflects attitudes some may regard as dated, although this has eased somewhat in recent years.

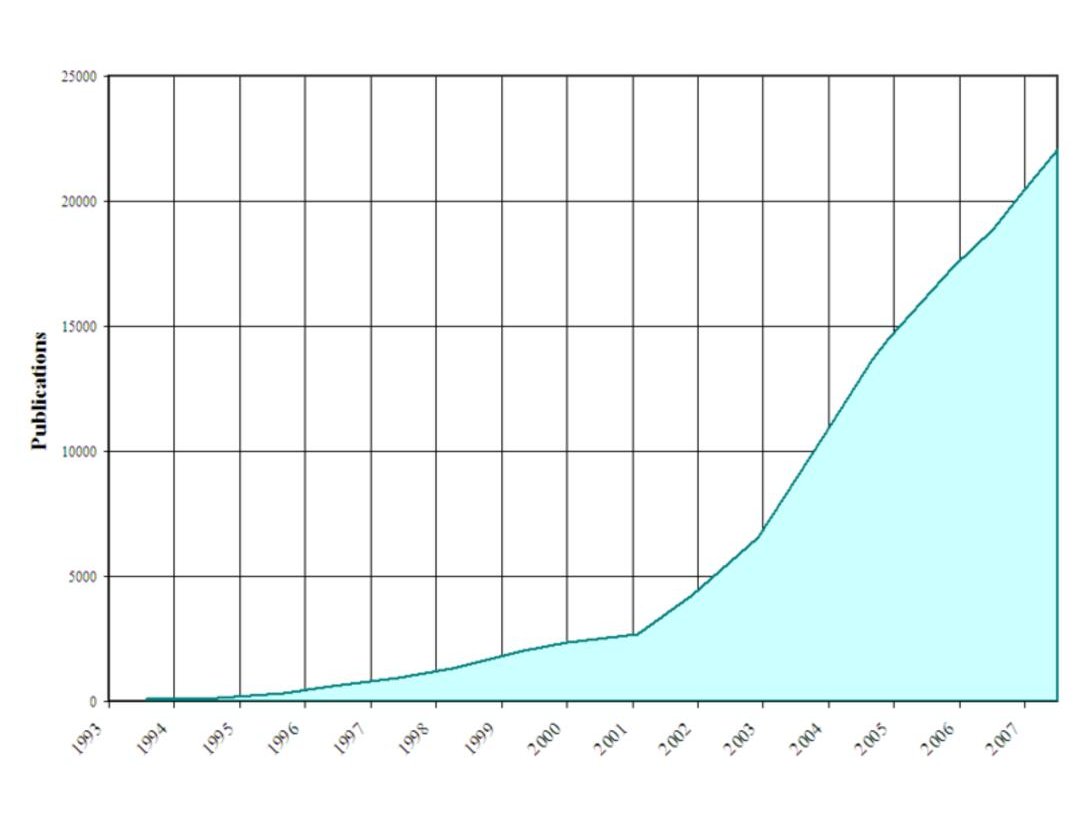

Project Gutenberg measures its size in numbers of e-texts, which can be somewhat confusing, since e-texts vary widely in length. However, a rough estimate of the size of the repository in number of words suggests that it is probably now larger than the fabled Library of Alexandria [2].


The collection started fairly small, limited by the relatively small amount of networking and human labor available to the project in its early years. This early phase offers no serious challenge to the conventional wisdom about projects of this type.

However, as the internet and the web matured, so did the community supporting Project Gutenberg. Today, there is a significant volunteer scanning and distributed proof-reading effort going on which has accounted for the tremendous growth that the project has seen over the last decade or so.

The size of Project Gutenberg today is probably limited more by the availability of public domain works than by the pool of volunteers willing to digitize them. The public domain has been starved repeatedly over the last few decades by copyright term extensions, which have effectively frozen it in the mid-1920s. As more works do move into the public domain, Gutenberg will certainly be able to capture them.

The sheer scale of the thing

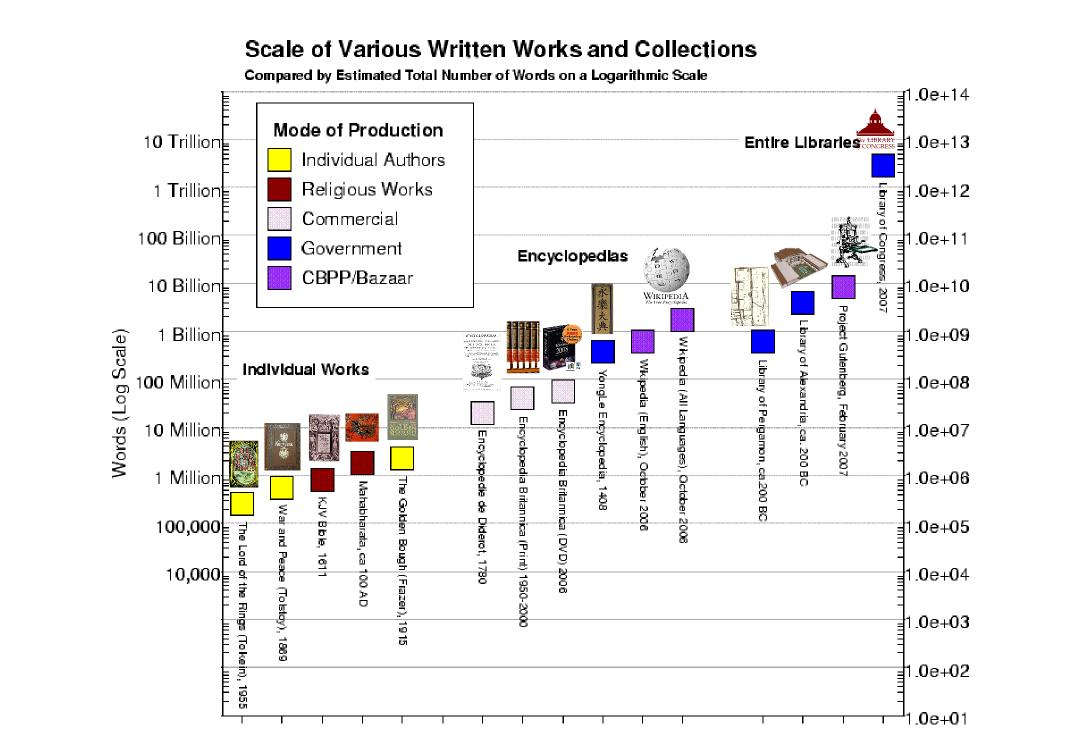

The sheer size of Wikipedia and Project Gutenberg challenges our intuitions about how these works compare to the great works of individuals, corporations, and governments. To ground our perception in reality, it is useful to construct a logarithmic chart spanning many orders of magnitude. Such a chart is not useful for making fine comparisons (even a factor-of-two difference between two objects can look quite small on a log chart), but by the same token it is quite forgiving of estimation errors, so we can afford to be fairly daring in our estimates. What it is useful for is giving us an idea of what sort of things we ought to be comparing to.
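To make the point about log charts concrete: on a base-10 logarithmic axis, the distance between two quantities $a$ and $b$ is $\log_{10}(b/a)$ decades. A few reference values:

$$
\log_{10} 2 \approx 0.30, \qquad \log_{10} 10 = 1, \qquad \log_{10} 1000 = 3
$$

So a factor-of-two difference spans less than a third of one decade, while the gaps such a chart is meant to reveal span whole orders of magnitude. By the same token, an estimate that is off by a factor of two shifts an item by only about 0.3 of a decade, which is why rough estimates are tolerable here.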

The greatest modern library is probably the US Library of Congress, and, unsurprisingly, it is several orders of magnitude larger than Project Gutenberg. But there are two caveats to consider. One is that the Library of Congress contains every work that has a copyright registered in the United States (because submitting a copy to the library is part of the registration process), whereas Project Gutenberg is limited (almost entirely) to works whose copyrights have expired. The other is that the figure for the Library of Congress is a rough estimate of the size of its print collection (which may contain a lot of repetition due to multiple editions), while Project Gutenberg is strictly an electronic digitization project. It would be interesting to compare the output of Project Gutenberg to government-sponsored digitization projects, which would be a much fairer comparison. Still, it is remarkable to think that Project Gutenberg now probably exceeds the size of the Great Library of Alexandria, which remains legendary to this day.


It's especially hard, though, to look at this chart and not be a little stunned by Wikipedia! The greatest encyclopedic work of corporate production is probably the Encyclopedia Britannica, yet it falls far behind in this comparison (by well over an order of magnitude!). The greatest encyclopedic work of government production was the Yong-Le Encyclopedia, commissioned by the Emperor of China in 1403. Yet even that is several times smaller than the whole of Wikipedia. (Note that the Wikipedia figures are the last reliable numbers from 2006, not the later estimates; Wikipedia is considerably larger today.)

Our conventional wisdom is that the most powerfully productive organizations are corporations and governments: institutions we hold in awe, revere, and fear. But in a few short years, a new player—the Commons Based Enterprise—has far out-produced some of the greatest works of both corporations and governments (at least in the area of encyclopedias).

Clearly, the conventional wisdom needs adjusting.

——

[1] Jim Giles. Nature 438, 900–901 (2005). http://www.nature.com/doifinder/10.1038/438900a

[2] This statement is difficult to test because no one really knows exactly how big the Library of Alexandria was, and some estimates are probably huge exaggerations. However, based on the most reliable estimates I could find, Project Gutenberg is now larger. The Library of Alexandria's holdings were counted in scrolls, which were generally somewhat shorter than books (and therefore than typical Project Gutenberg e-texts); both, however, can be estimated in terms of number of words, which makes comparison possible.